2005: Simulations from a Research Project by Judith Radloff, Honours 2004, entitled “Obtaining Bidirectional Texture Reflectance of Real-World Surfaces by means of a Kaleidoscope” represented by jelly babies.

2006: Artificially textured three-dimensional computer graphic images generated by the Virtual Reality and Computer Graphics research group under the leadership of Shaun Bangay.

2007: Rendered images of the Mandelbrot Set, generated by Greg Atkinson as part of his 2006 Honours project research into distributed grid computing.

2008: Logic operation lookup tables implemented as textures used in high performance encryption on a graphics card. Images generated by Nicholas Pilkington as part of his 2007 Honours project investigating General purpose computing on Graphics hardware.

2009: The cover shows a montage of images generated by Barry Irwin and the Security and Networks Research Group. The globe in the background is a rendered Hilbert fractal plot of malicious traffic collected over the last three years. In the foreground is the same traffic represented in a three-dimensional ‘cube of doom’, showing three particularly aggressive networks.

2010: The cover shows images generated by Benji Euvrard. Conventional digital photographs capture only a small portion of the range of light intensities present in most scenes, leaving some regions either under- or overexposed. High dynamic range (HDR) images avoid this problem by combining several images taken with different exposures. Current display devices are unable to accurately reproduce the extra detail present in HDR images, requiring that such images be distorted using tone-mapping operations (such as in the image of the Hamilton building shown on the cover) in order to reveal the extra detail. These images were taken as part of an Honours project which developed a novel alternative strategy to accurately print HDR imagery.
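As a rough illustration of what a tone-mapping operation does, the sketch below compresses unbounded HDR luminance values into the displayable range [0, 1). It uses the simple global Reinhard curve L / (1 + L), which is an assumption chosen for brevity and not necessarily the operator used for the cover image; the function name `reinhard_tone_map` is hypothetical.

```python
def reinhard_tone_map(luminances):
    """Compress HDR luminance values into [0, 1) with the global Reinhard curve.

    Assumption: inputs are non-negative scene luminances in arbitrary linear
    units. This is an illustrative operator, not the one used for the cover.
    """
    mapped = []
    for lum in luminances:
        if lum < 0:
            raise ValueError("luminance must be non-negative")
        # Small values pass through almost unchanged; large values approach 1.
        mapped.append(lum / (1.0 + lum))
    return mapped


if __name__ == "__main__":
    # Shadows, mid-tones and a very bright highlight from one scene.
    print(reinhard_tone_map([0.0, 1.0, 3.0, 1000.0]))
```

Dark regions keep most of their contrast while highlights are squeezed together, which is the kind of distortion the paragraph above describes.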

2011: The cover is made up of a montage of images produced by Barry Irwin and Bradley Cowie. These images are heatmaps of various aspects of the traffic observed between 2007 and 2009 on the Network Telescope sensor operated by the Security and Networks Research Group. As a rule of thumb, warmer colours indicate larger values, with the colour ranging from dark blue to dark red over the HSV colourspace. White and black indicate differing kinds of missing data from the datasets.
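The colour rule described above can be sketched as a sweep of the HSV hue from blue (small values) to red (large values). This is a minimal illustration only: the function name `heat_colour`, the linear scaling and the fixed saturation and brightness are assumptions, not the settings used to produce the cover images, and the white/black markers for missing data are left out.

```python
import colorsys


def heat_colour(value, vmin, vmax):
    """Map a value in [vmin, vmax] to an (r, g, b) tuple with components in [0, 1].

    Assumption: hue runs linearly from 2/3 (blue) at vmin down to 0 (red)
    at vmax, with full saturation and brightness.
    """
    if vmax <= vmin:
        raise ValueError("vmax must be greater than vmin")
    # Normalise and clamp so out-of-range values take the end colours.
    t = (value - vmin) / (vmax - vmin)
    t = min(1.0, max(0.0, t))
    hue = (1.0 - t) * (2.0 / 3.0)
    return colorsys.hsv_to_rgb(hue, 1.0, 1.0)


if __name__ == "__main__":
    for v in (0, 5, 10):
        print(v, heat_colour(v, 0, 10))
```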

2012: The pictures used for the cover are part of a collection of images taken over the years in the Mbashe municipality, near Dwesa, where the Telkom Centre of Excellence at Rhodes, together with the one at Fort Hare, has an experimental site, which was established at the beginning of 2006.

The site, known as the Siyakhula Living Lab, realizes an internet-enabled ‘broadband island’ through sixteen points-of-presence located in schools but open to the rest of the community. The purpose of the site is to study effective ways of introducing ICTs in areas lacking them, in such a manner that they are sustainable and useful.

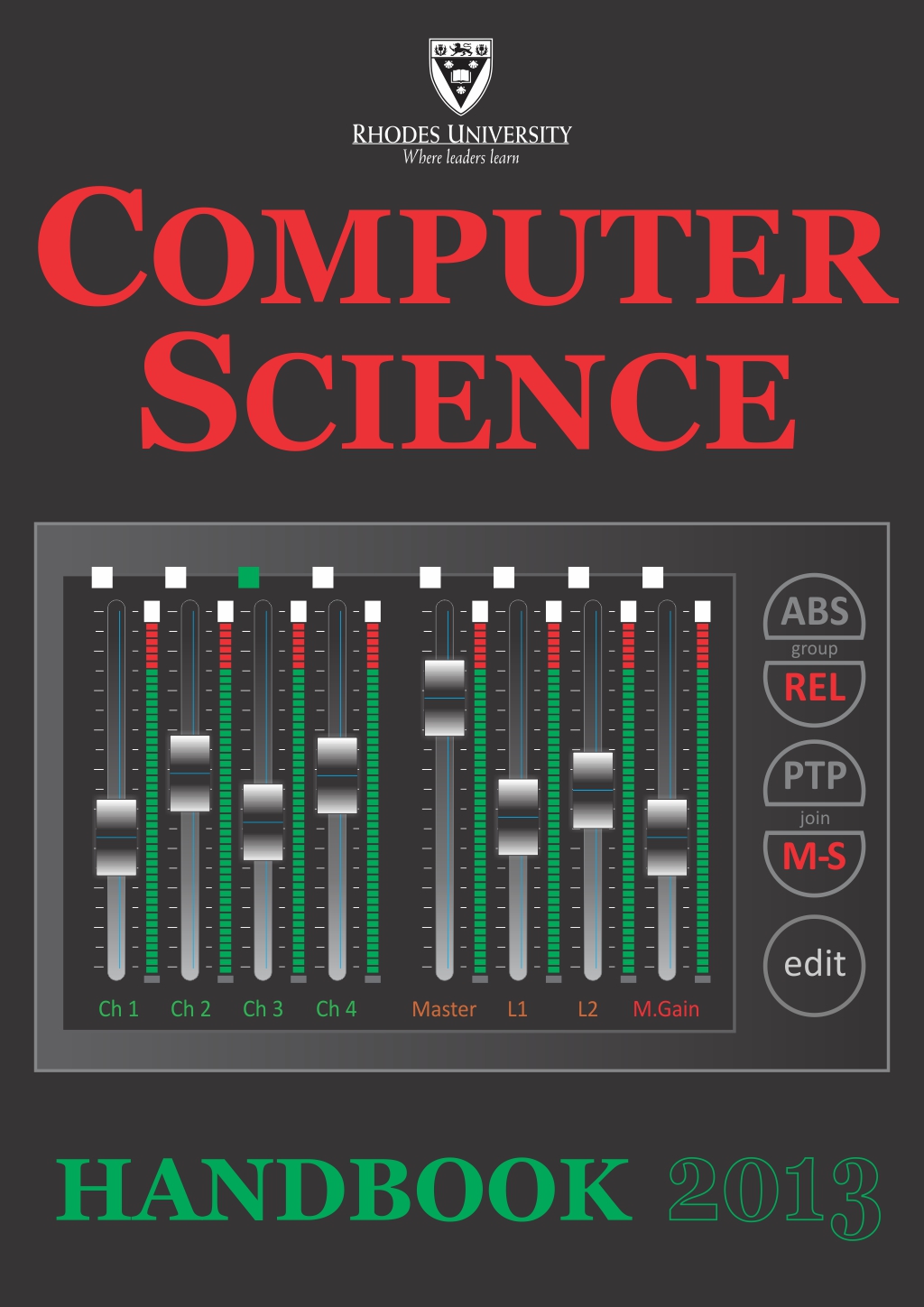

2013: The graphic display on the cover was created by Nyasha Chigwamba, a PhD student in the department, in collaboration with the German-based company UMAN. It shows a number of volume control faders, which control the volume of audio channels on local or networked devices. These faders can be grouped together in peer-to-peer or master/slave relationships, such that a single fader move can cause synchronized movement of other faders in the group.

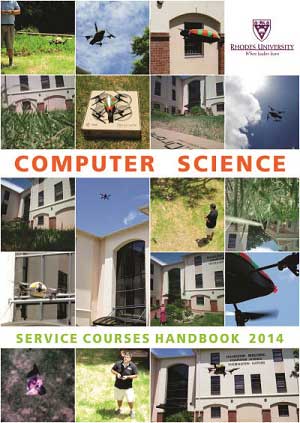

2014: There are many applications for unmanned aerial vehicles (UAVs) globally. Local applications cover a wide range of situations, from mapping informal settlements to guarding against the dangers of floods and fires, to joining the fight against poaching. These tasks can be done even more effectively if the UAVs can also be autonomous. The cover depicts a collage of images of a UAV used by researchers within the department to do exactly that. Using various image processing algorithms, the UAV has taken the first baby steps towards autonomous flight.

2015: The cover consists of six images produced by Alastair Nottingham as part of his PhD research. These images are a visualized output of six alternative parallel shuffle operation execution patterns to rearrange data stored in coalesced but out-of-order caches across thirty-two threads on an Nvidia GPU, using the CUDA framework. Each distinct parallel shuffle operation is assigned a unique number and colour. Higher operational numbers in a graph indicate high computational overhead, while smaller fragmentation periods require additional distinct high-latency memory transactions. The visualized process applies to a low-level GPU caching mechanism that greatly accelerates access to sparsely distributed and uncoalescable records in high-latency GPU device memory.

2016: An Optical Coherence Tomography scan showing a single slice through a human finger. The region between the red and green lines is the papillary junction, where the epidermis attaches to the dermis and the fingerprint is formed. This fingerprint is reflected on the surface of the skin (thicker green line).

2017: A selection of images from the SKA-funded project completed by Antonio Peters to reduce the effect of noise in images from radio telescopes. Clockwise from top left: a radio telescope, a tessellated image for correcting the sensitivity pattern of the antenna, and an uncorrected image.

2018: The cover photograph is derived from an Honours project implemented by Kyle Marais. The project enabled control over immersive sound via an Oculus Rift Head Mounted Display and Leap Motion gesture controller. The display shows a hand reaching for a ball that represents a sound source. As the user moves their hand in space, the displayed hand moves with it. The user can take hold of the sound source and move it in three-dimensional space. As the displayed sound source is moved, its associated sound moves about the space accordingly.

2019: Test flight of DJI Mavic quadcopter currently being used in research on identifying alien invasive vegetation. Software developed for the drone is able to identify the invasive Hakea shrub using machine learning algorithms applied to images taken on the fly.

2020: The front cover image is a visualisation of a piece of music. The music is translated from the analogue audio domain into digital information, which is then analysed and processed in various ways to produce an image that is the representation of the original sound. The cover image was produced by Corby Langley in 2019, as part of his Honours research project. It represents a piece of music entitled 203a - Song of Virgins. The colours represent the frequency/pitch of the note; loops in the image represent repeating sections of music (such as verses and choruses).

2021: Between 2018 and 2020, a research study by Katherine James was conducted to ascertain the success with which drone imagery could be used in conjunction with various image processing techniques and deep learning algorithms to identify instances of an invasive shrub. Images on the front cover show the results of applying various vegetation indices to an original drone image depicted in the top left (and duplicated in the bottom right) of the page to highlight instances of this shrub. Images on the back cover show the results of the inference performed by the drone in recognising instances of the target shrub on a sunny day with evidence of shadows. Alternate rows show the input drone images and the processed output images from the classification process showing target class pixels in yellowish-green and non-target class pixels in purple.

2022: The cover of this year’s Handbook was inspired by an Honours research project conducted by Keagan Ellenberger in 2021, which looked at finding point sources in imperfect astronomical images from radio telescopes (the original images from Keagan’s research are shown on the back cover). The project is a good example of cross-disciplinary research (involving Physics and Computer Science in this case). These images show how the original signal source is blurred by interference from the atmosphere and other radio-frequency sources, leading to the image captured by a radio telescope being “dirty”.

2023: The cover image depicts events in League of Legends, a Multiplayer Online Battle Arena video game.

Jonah Bischof used machine learning techniques to build a simple but capable AI for recommending moves to a novice player. Two five-player teams, blue and red/orange, compete. Each team has its own base, and the two bases are separated by the central, cyan-coloured river. The main goal, destroying the enemy base, is usually achieved by outperforming the enemy in one of the three lanes and completing objectives such as destroying towers and monsters. Beginners struggle to perform 200 actions per minute as professionals do, so the AI assigns player roles based on predefined objectives. It simplifies their moves based on how professionals play in a specific 7 x 7 quadrant of the map, reducing the millions of possibilities to a manageable number. The system correctly predicted moves in an unseen game.

2022: This image visualises learned features using activation mapping, showcasing the effectiveness of super-resolution via stable diffusion and a customised convolutional algorithm in a new, fully-fledged licence plate detection and classification system. Implemented by MSc student Alden Boby, the system achieves state-of-the-art results in vehicle searches and reidentification across multiple camera feeds.